Games & Tools (& Experiences)

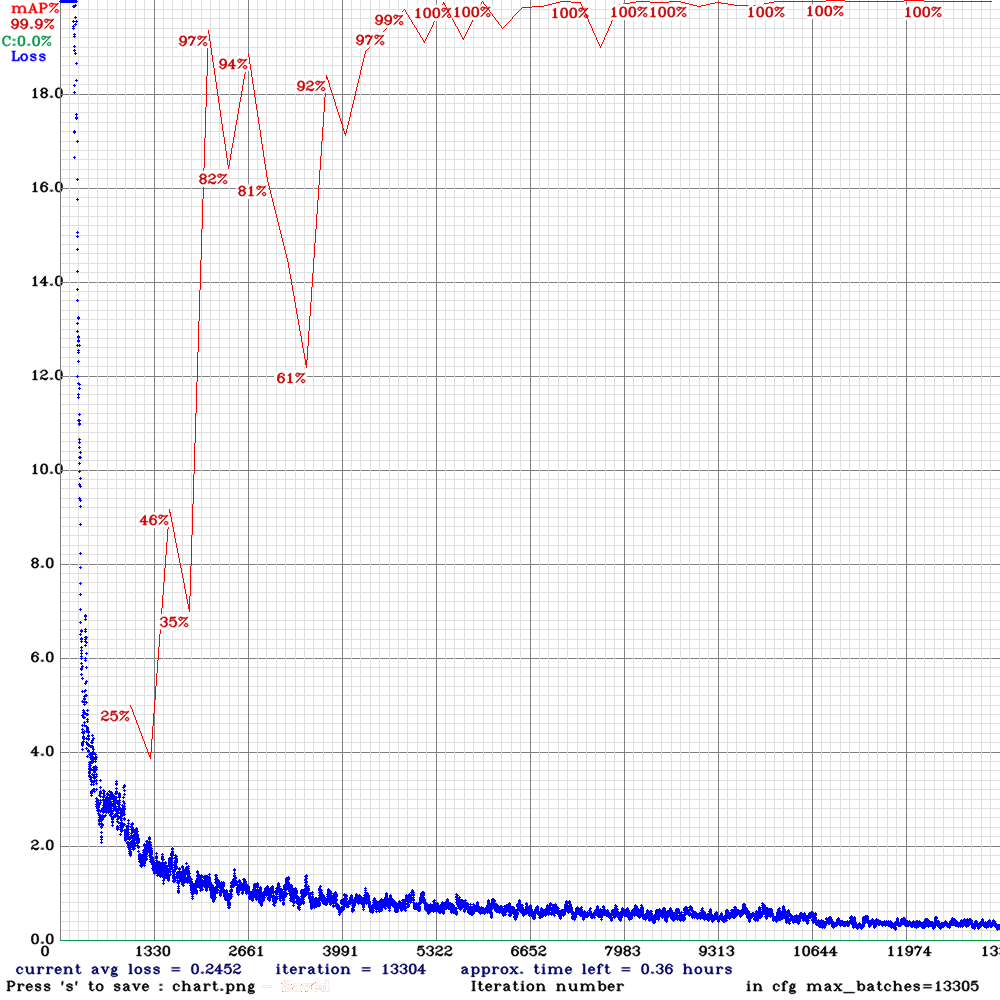

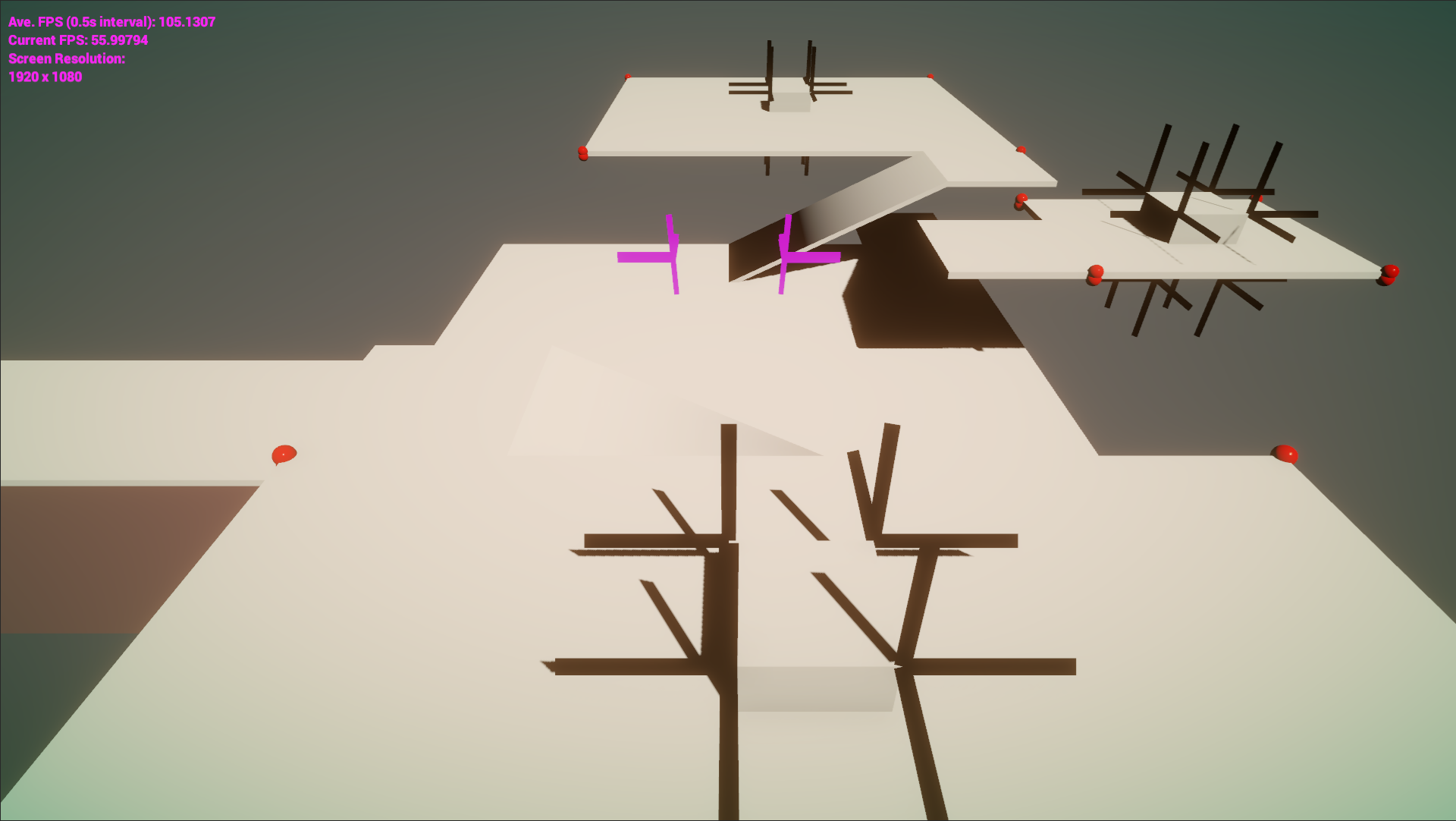

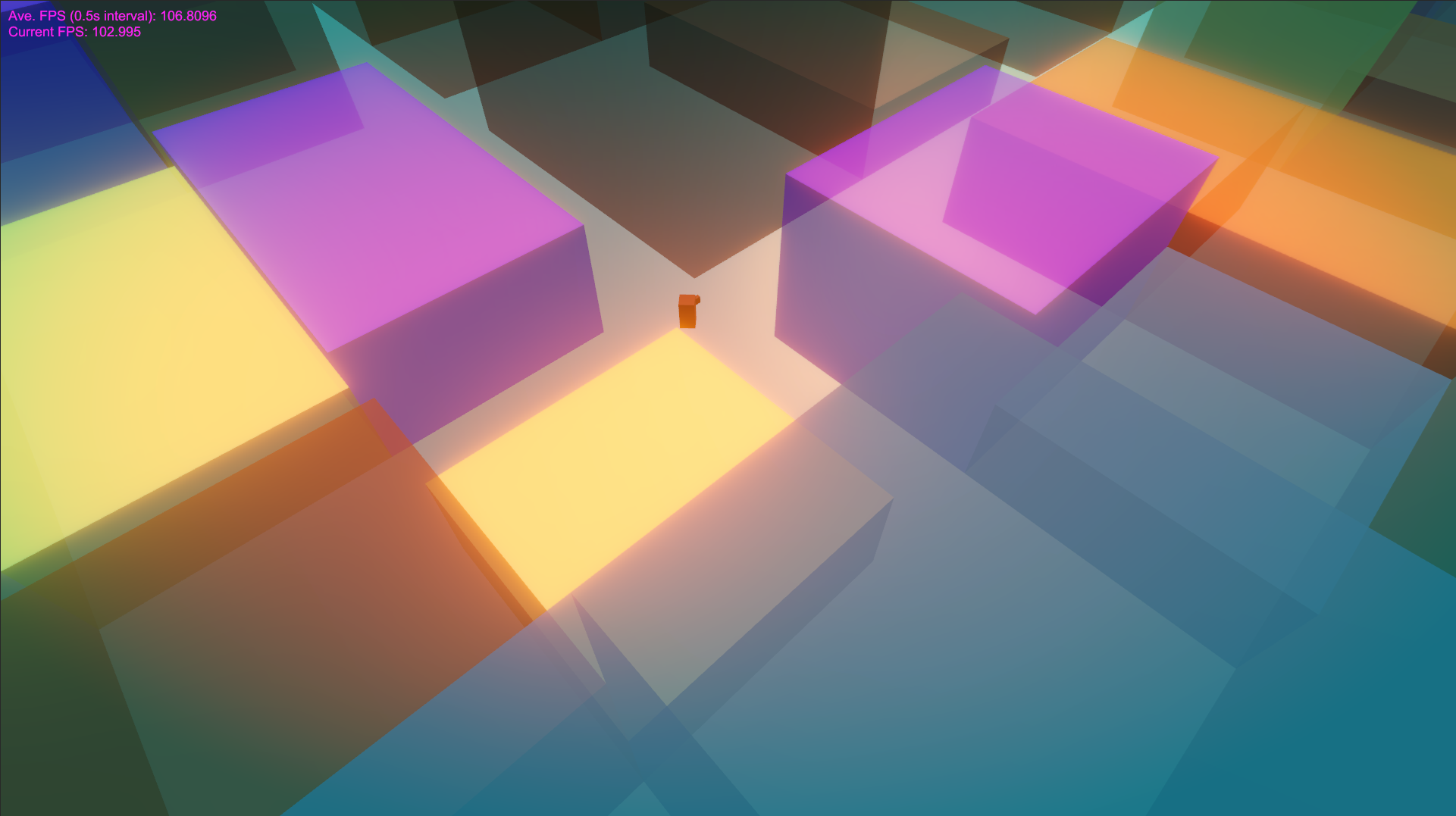

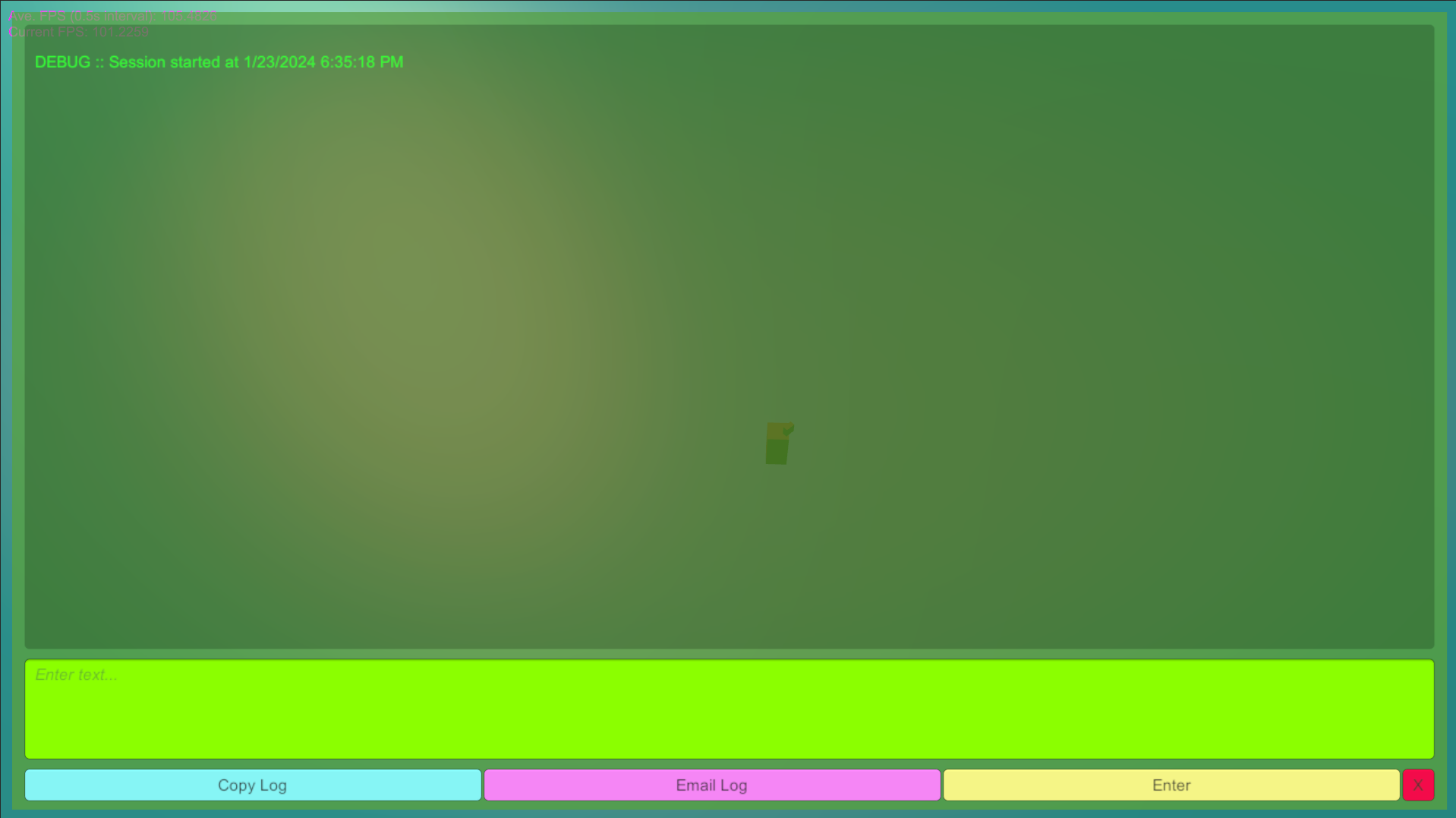

Droidknot - Stress & Satisfaction (2024-present)

Description: A simple game with a satisfying core mechanic, like some of the mid-core/cozy casual games I play in between more serious games. Envisioned as a mash-up of the mechanics of Bad North (PS5) and a restaurant theme inspired by playing Traveller's Rest (PC). We would describe the genre as cozy-casual, roguelike, real-time tactics restaurant management.

Status: WIP

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): Mobile (Android), PC

Role: Indie developer

Notable Contributions (so far):

- Procedural generation of levels using generated meshes and primitives

- Development visualization

- Integrated the KitchenSink library to issue debug commands for generating levels

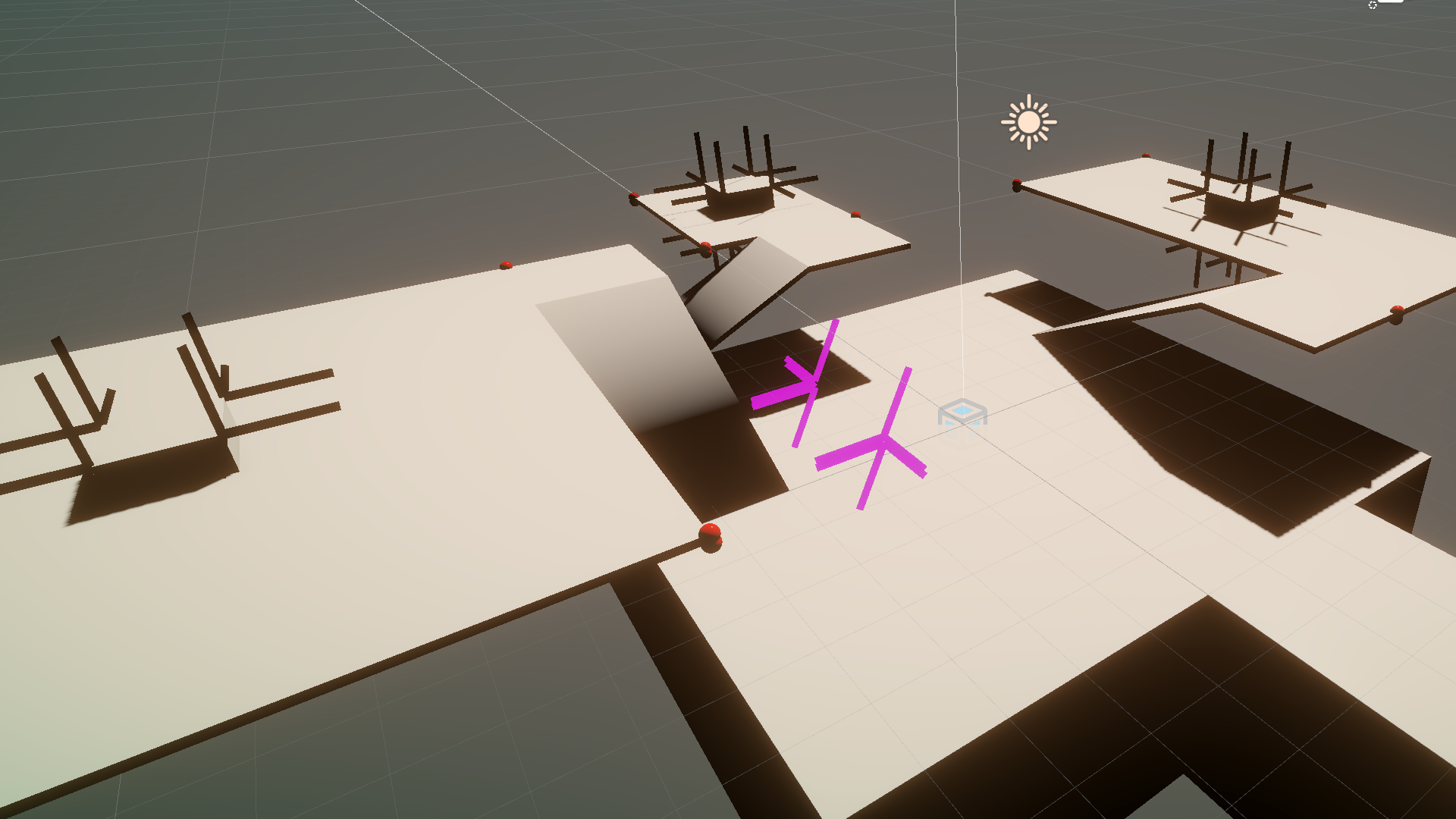

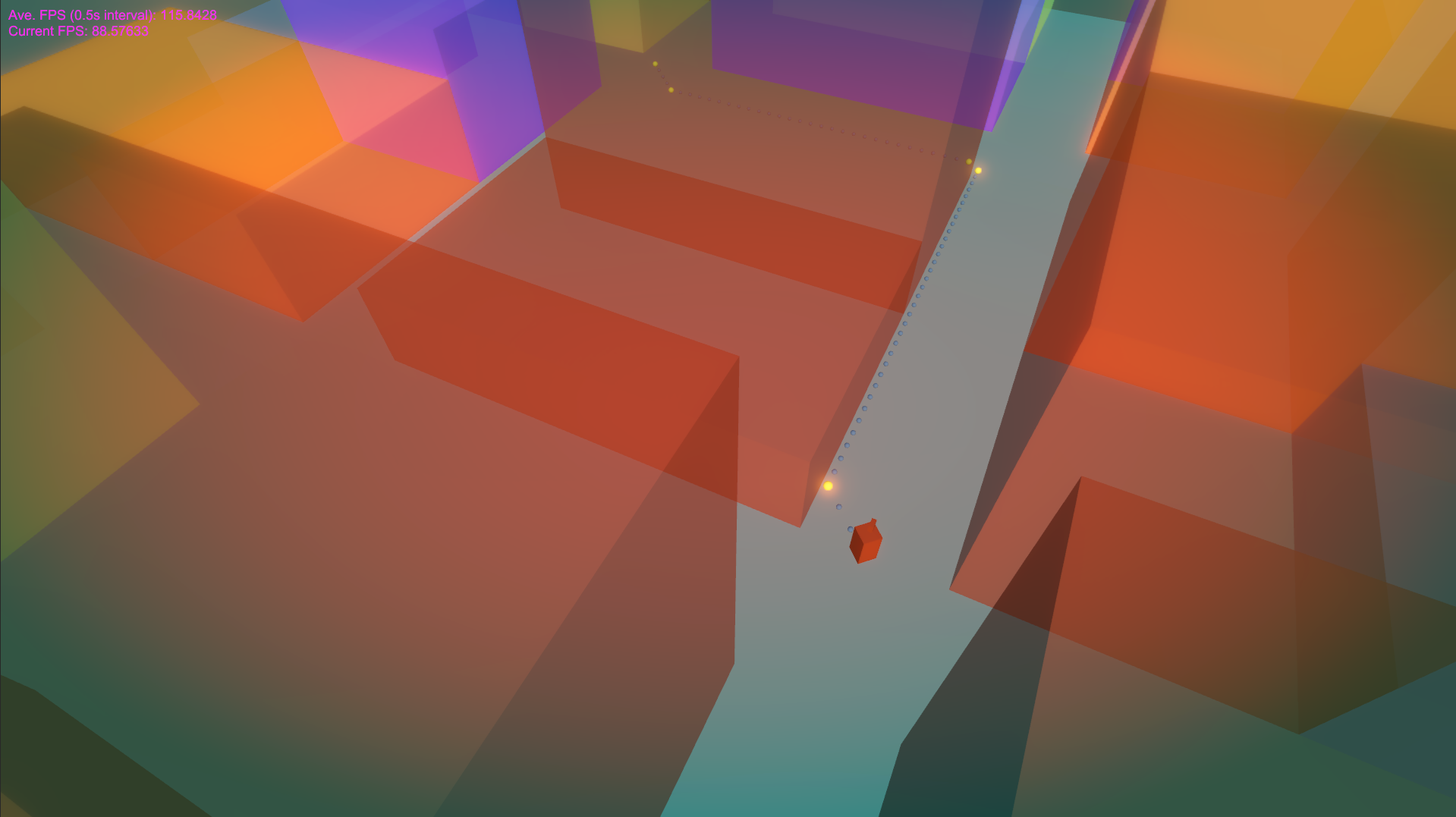

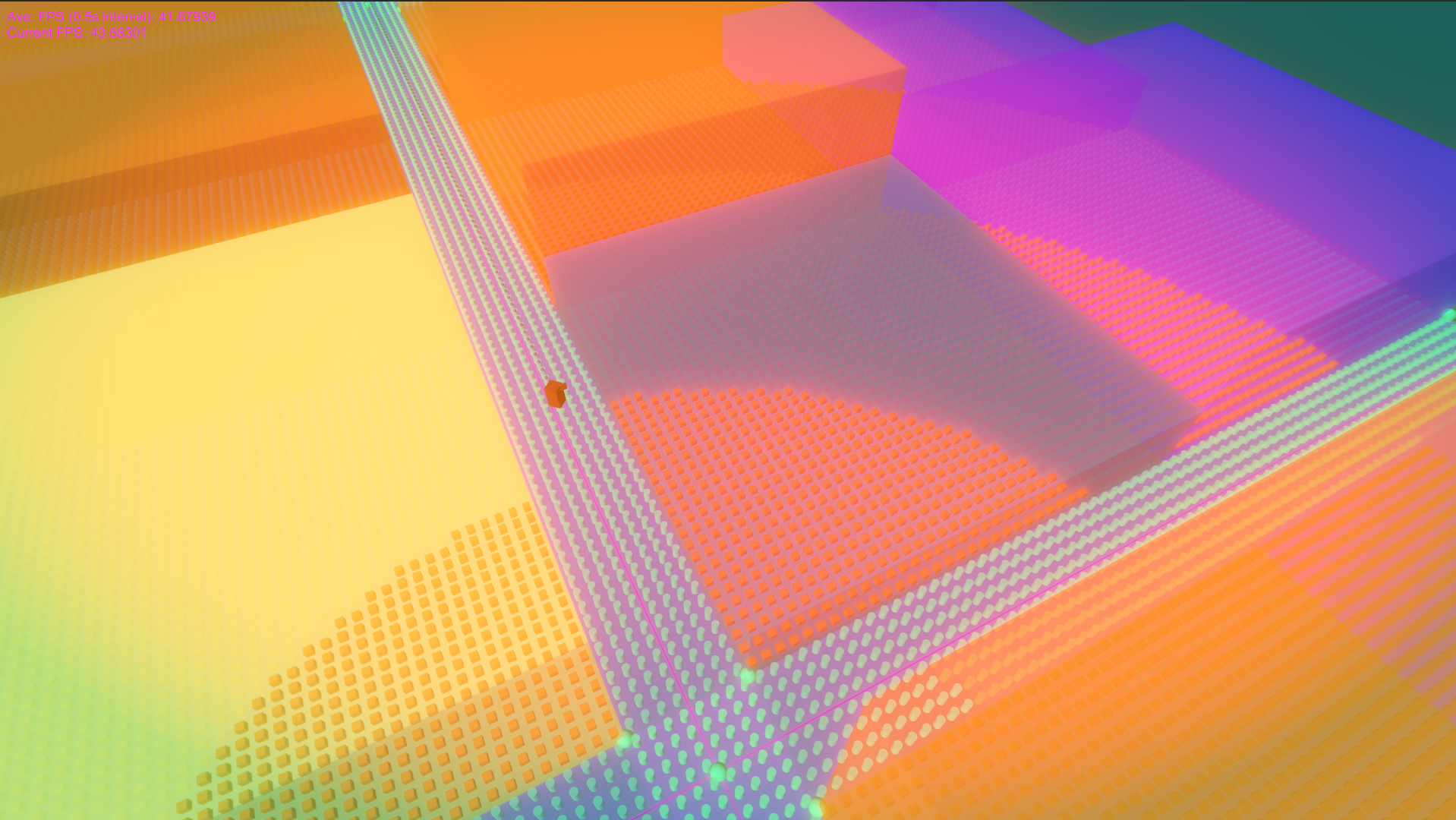

Personal - Pathfinding Sandbox (2024-present)

Description: I hadn't hand-written pathfinding in a while, opting instead for Unity's built-in NavMesh, which is more efficient in most cases. For fun, I implemented A* in Unity. I also experimented with some optimization tricks I learned in the Udacity Flying Cars Nanodegree.

Status: Posted to GitHub

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC

Role: Author

Notable Contributions:

- Implemented A* from scratch for Unity in C#

- Ported a Python implementation of collinearity pruning to C#

- Ported a Python implementation of Bresenham's pruning to C#

- Provided smoothing with SQUAD splines

- Implemented basic camera controls

- Development visualization

- Integrated the KitchenSink library to issue debug commands for generating levels
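The two pruning passes listed above can be illustrated with a minimal Python sketch. This is not the sandbox's code: it assumes integer 2D grid waypoints, a path whose consecutive steps are already obstacle-free, and a hypothetical `blocked` set of obstacle cells. All function names are made up for the example.

```python
def collinear(p1, p2, p3, epsilon=1e-6):
    """True if three 2D points lie on (nearly) one line (triangle area test)."""
    area = (p1[0] * (p2[1] - p3[1])
            + p2[0] * (p3[1] - p1[1])
            + p3[0] * (p1[1] - p2[1]))
    return abs(area) < epsilon


def prune_collinear(path):
    """Drop waypoints that sit on a straight line between their neighbors."""
    if len(path) < 3:
        return list(path)
    pruned = [path[0]]
    for i in range(1, len(path) - 1):
        # Keep path[i] only if the path actually turns there.
        if not collinear(pruned[-1], path[i], path[i + 1]):
            pruned.append(path[i])
    pruned.append(path[-1])
    return pruned


def bresenham_cells(start, end):
    """Grid cells visited by Bresenham's line from start to end, inclusive."""
    x0, y0 = start
    x1, y1 = end
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx - dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 > -dy:
            err -= dy
            x0 += sx
        if e2 < dx:
            err += dx
            y0 += sy


def prune_line_of_sight(path, blocked):
    """Drop waypoints that can be skipped without the line crossing a blocked cell."""
    if len(path) < 3:
        return list(path)
    pruned = [path[0]]
    for i in range(1, len(path) - 1):
        # Keep path[i] only if skipping it would cut through an obstacle.
        if any(cell in blocked for cell in bresenham_cells(pruned[-1], path[i + 1])):
            pruned.append(path[i])
    pruned.append(path[-1])
    return pruned
```

Collinearity pruning only removes points on perfectly straight runs, while the Bresenham pass can also cut corners across open cells, so it typically yields fewer waypoints at the cost of a line trace per candidate.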

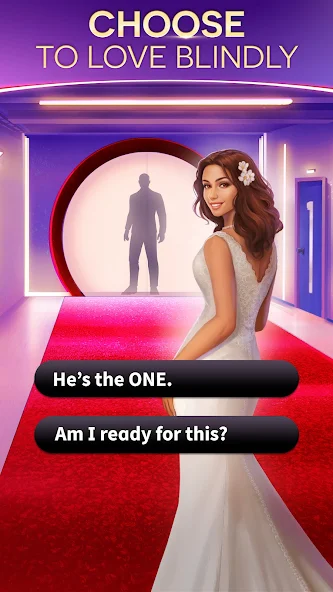

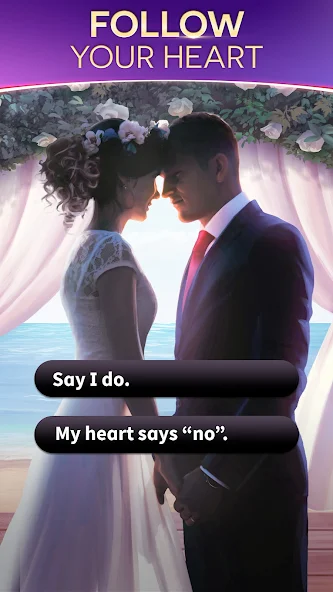

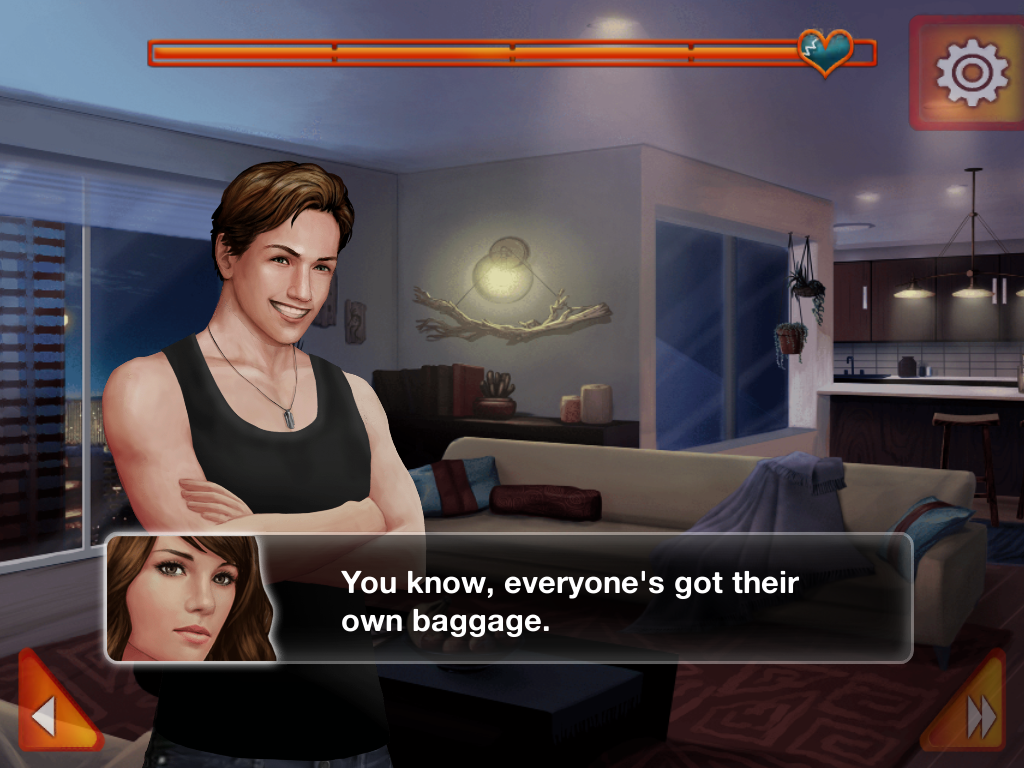

Netflix - Netflix Stories: Love is Blind (2023)

Description: A visual romance adventure novel based on the popular Netflix show of the same name.

Status: Released in 2023 on Google Play and the Apple App Store (500K+ downloads on Android)

Engine/Language(s)/Libraries: Unity/C#/BFML/AppsFlyer/Febucci Text Animator

Target Platform(s): Mobile (Android/iOS)

Role: Full-Time Contingent Talent (Software Engineer, Game Systems)

Notable Contributions:

- Integrated the AppsFlyer SDK for telemetry tracking of player activity. This was a true integration that required authoring novel code in a highly technical codebase to implement custom tracking features, not just an SDK installation. I coordinated with user acquisition and product teams at Netflix to determine which calls were feasible within the project scope:

- Regularity tracking (days 4-7 of consecutive play, resetting after 7)

- Tracking total reading time in 10-minute intervals, taking into account pausing, backgrounding and idling

- First login

- Various chapter and season start and completion metrics

- For both iOS and Android

- Developed tools for the in-game debug panel for testing the integration

- Local Android build testing in Android Studio

- Kicked off and validated builds via Jenkins

- Coordinated with SDETs who performed the official testing and sign-off of the features

- Bug fixes in support of the release

- Tool refactors in support of production for future titles to be featured in the same hub application

- Implementation of native features like native app review

- Documented features and authored dev guides on an internal Confluence site

- Performed deep profiling of the latest version of the Febucci Text Animator plugin on a stream to verify a reduction in Main.GC() calls before copying it up to the main stream to improve performance
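The regularity tracking described above (reporting days 4 through 7 of consecutive play, then resetting) can be sketched roughly as follows. This is an illustration only, written in Python rather than the project's C#. The class, the event names and the choice to count calendar days are all assumptions, and no AppsFlyer call is shown.

```python
from datetime import date, timedelta


class StreakTracker:
    """Counts consecutive days of play and reports streak days 4-7 (hypothetical)."""

    def __init__(self):
        self.last_play = None  # date of the most recent counted session
        self.streak = 0        # consecutive days so far in the current cycle

    def record_play(self, today):
        """Register a session on `today`; return an event name to send, or None."""
        if self.last_play == today:
            return None  # a second session on the same day does not count again
        if self.last_play is not None and today - self.last_play == timedelta(days=1):
            self.streak += 1
        else:
            self.streak = 1  # first session ever, or a day was missed
        self.last_play = today
        # Hypothetical event naming; only days 4-7 are reported.
        event = f"consecutive_day_{self.streak}" if 4 <= self.streak <= 7 else None
        if self.streak == 7:
            self.streak = 0  # reset after day 7 so the cycle can start over
        return event


tracker = StreakTracker()
first_day = date(2023, 1, 1)
events = [tracker.record_play(first_day + timedelta(days=i)) for i in range(8)]
```

In a real integration the returned name would be handed to whatever analytics call the SDK exposes, and the two fields would need to be persisted between sessions.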

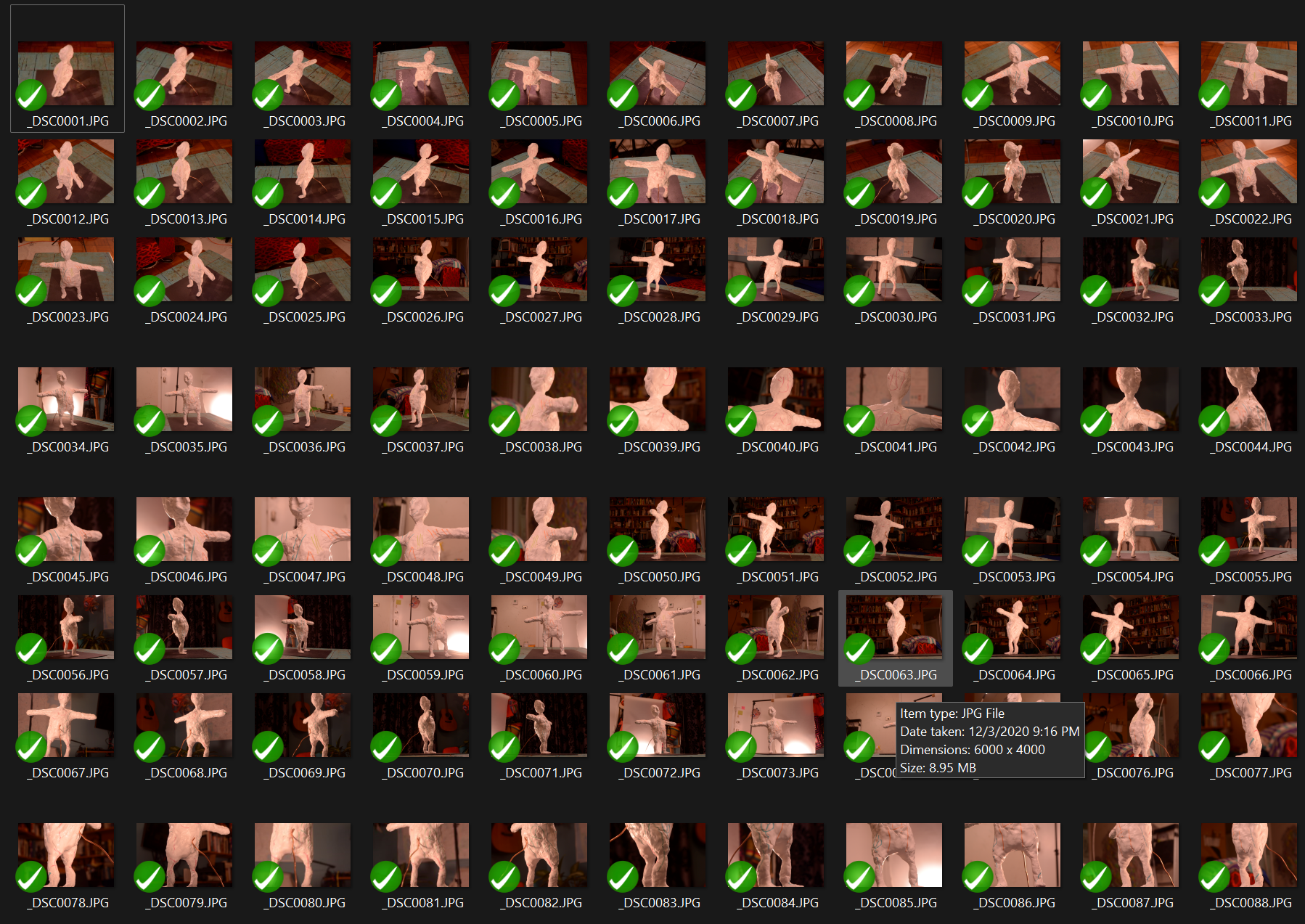

Droidknot - Hiveliminal (2020-present)

Description: A walking RPG exploring what it's like to be neurodivergent and having to mask in a neurotypical world.

Status: WIP

Engine/Language(s)/Libraries: Unity/C#/KitchenSink

Target Platform(s): PC

Role: Indie developer

Notable Contributions:

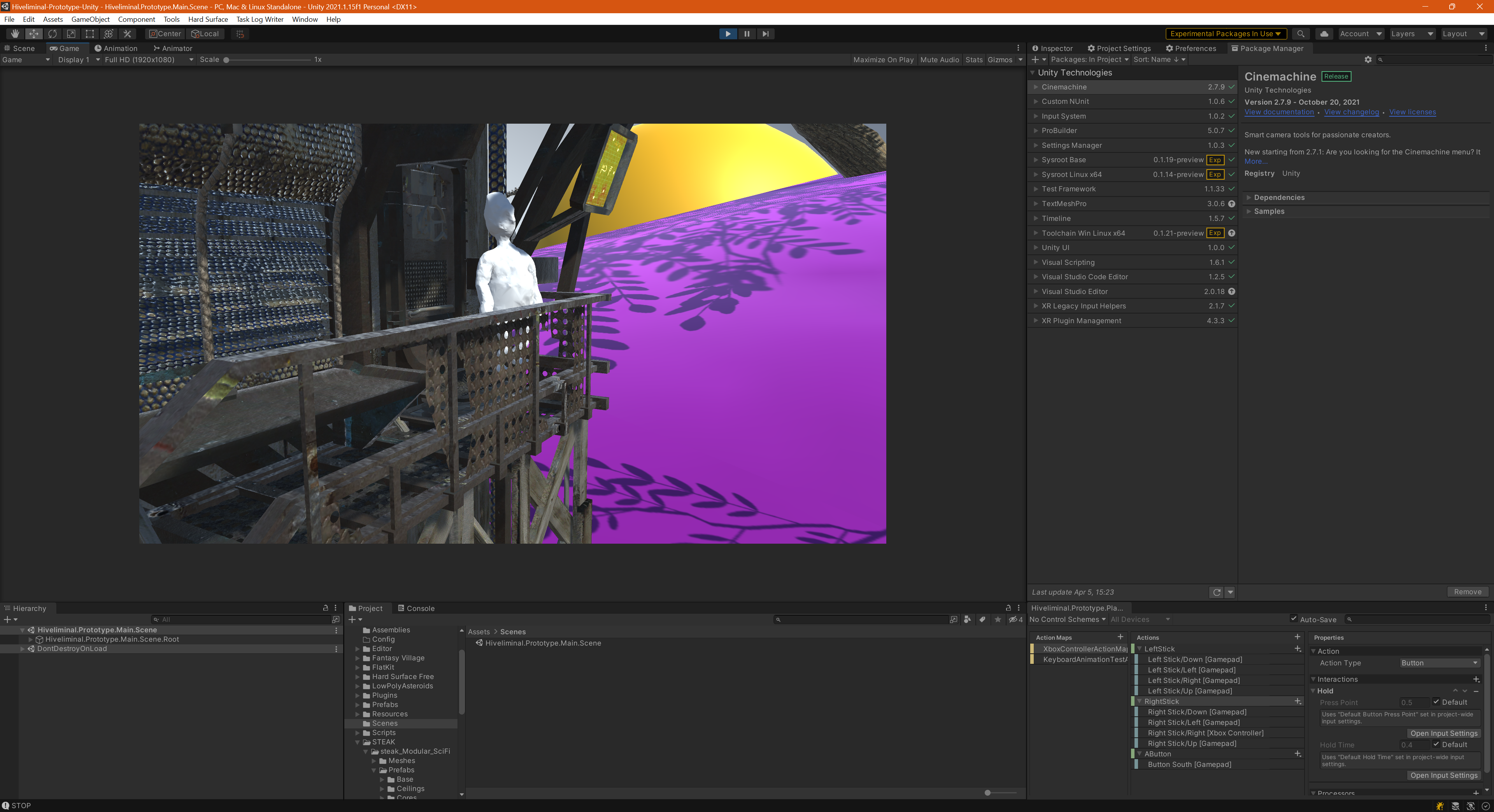

- Implemented camera and camera controls

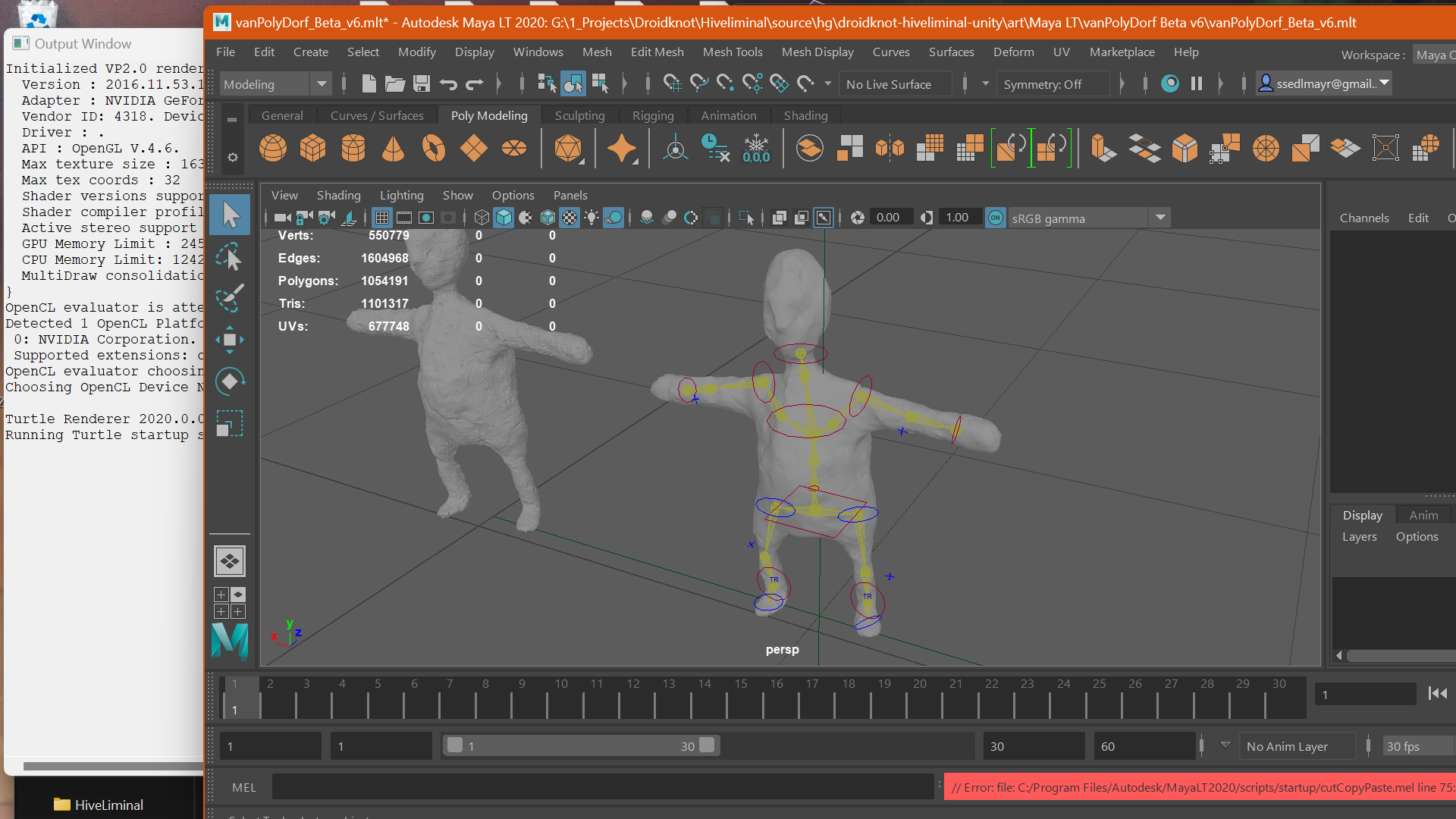

- Starting from a hand-sculpted model created by my spouse and collaborator M Eifler:

- Marked up the model to make features easier to parse for photogrammetry

- Captured photos for photogrammetry

- Used Meshroom to process the photos into a workable model

- Closed holes in the model in Maya

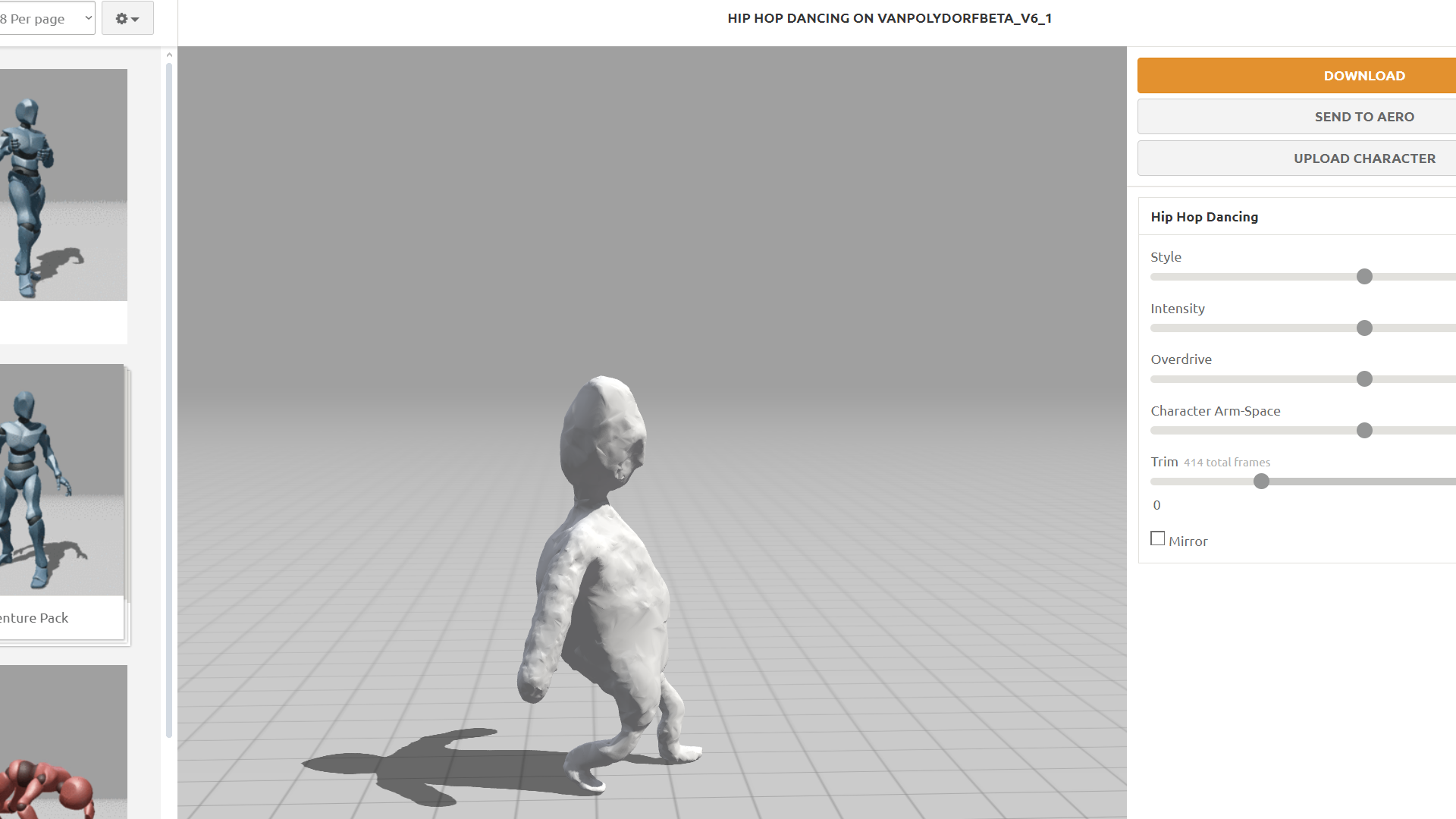

- Uploaded and auto-rigged the model with Mixamo

- Downloaded animations from Mixamo and imported the model and animations into Unity

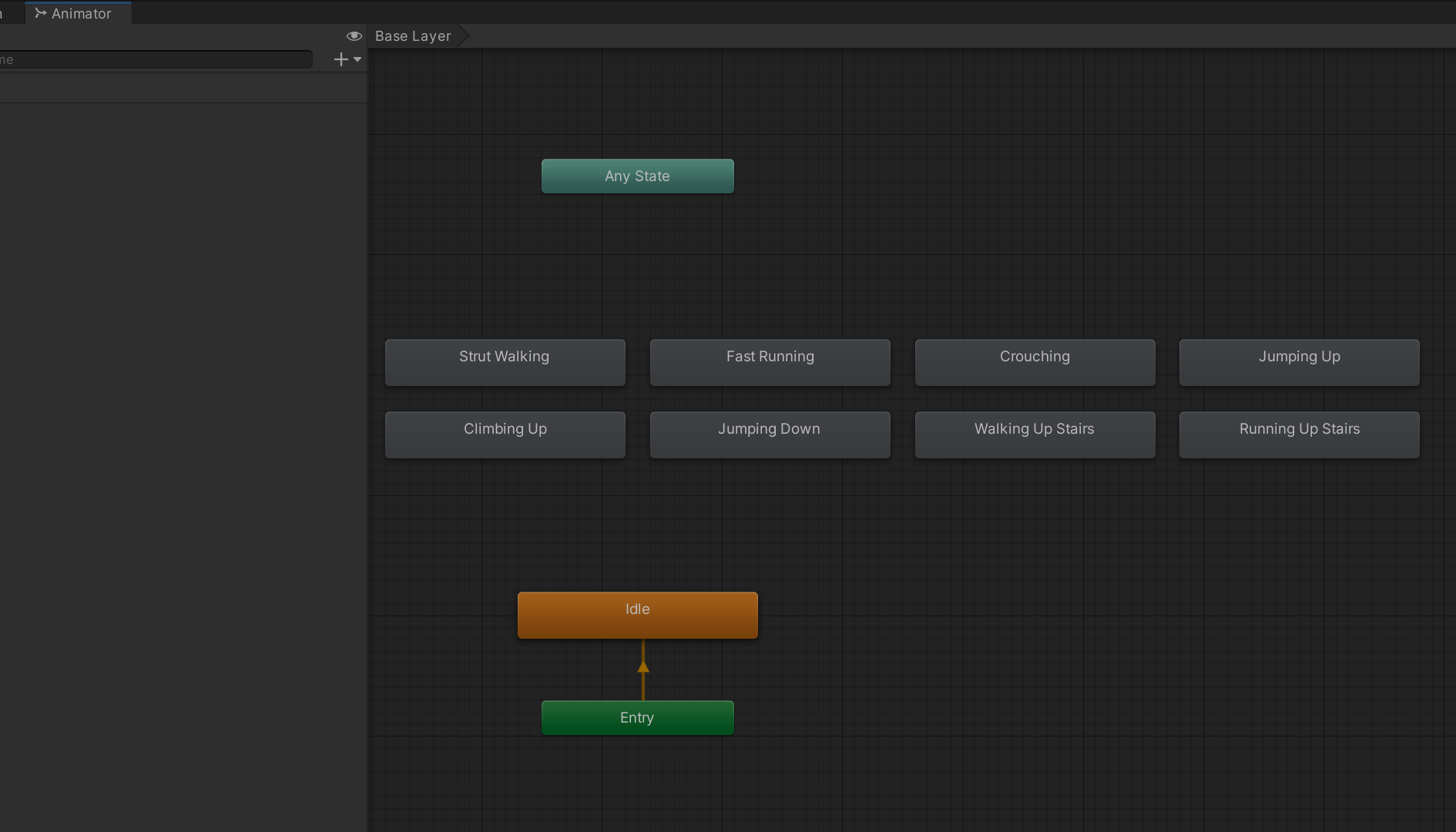

- Created an animation state machine for the player character

- Added an Xbox controller scheme to control the player character

- Created a prototype scene with some Asset Store art to test character-related mechanics

- Background audio streaming

Nomadic - VR Panopticon (2018)

Description: A behavior tree agent to optimize foot traffic in a controlled VR arcade space and prevent physical collisions between players wearing headsets in the era before pass-through was a thing.

Status: Company closed in 2020

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC, VR (Oculus Rift)

Role: FTE (Senior Gameplay Engineer)

Notable Contributions:

- Integrated a behavior tree system I wrote into Nomadic's codebase

- Defined custom composite nodes to slow down or speed up a player's movement (which I called "nag" and "drag" nodes), time interactions, and detect player collisions in the virtual player space based on their positional data synced with OptiTrack

- Defined test data in JSON to drive a prototype interaction in the virtual booth space for Arizona Sunshine's Nomadic VR experience as shown in the video above

- Coordinated directly with the producers to identify their future needs: the eventual goal as I envisioned it was a suite of editors, one on desktop, and a runtime editor for use in VR, to allow producers to prototype and define experiences without recompiling the app or involving an engineer in any way

Nomadic - Arizona Sunshine: Rampage (2018)

Description: A VR arcade experience based on the popular indie game Arizona Sunshine.

Status: Released in 2018

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC, VR (Oculus Rift)

Role: FTE (Senior Gameplay Engineer)

Notable Contributions:

- As SDK engineer, broke code out from Nomadic's 'Chicago' prototype into an assembly that could be reused across experiences, which became the basis for the Nomadic SDK

- Defined key code smells and vulnerabilities in the Chicago prototype to be refactored into more optimal, sustainable and reusable code

- Coordinated with third-party vendors in Chile to perform refactors under my advice to the Platform Engineer and Director of Software

- In support of our partner, Vertigo Games, coordinated with the Director of Software and the props and firmware teams to test props in the Nomadic experience booth

- Provided SDK support to Vertigo Games

- Performed temporary refactors in a separate Perforce branch for my separate work on the Panopticon agent, in support of the production team

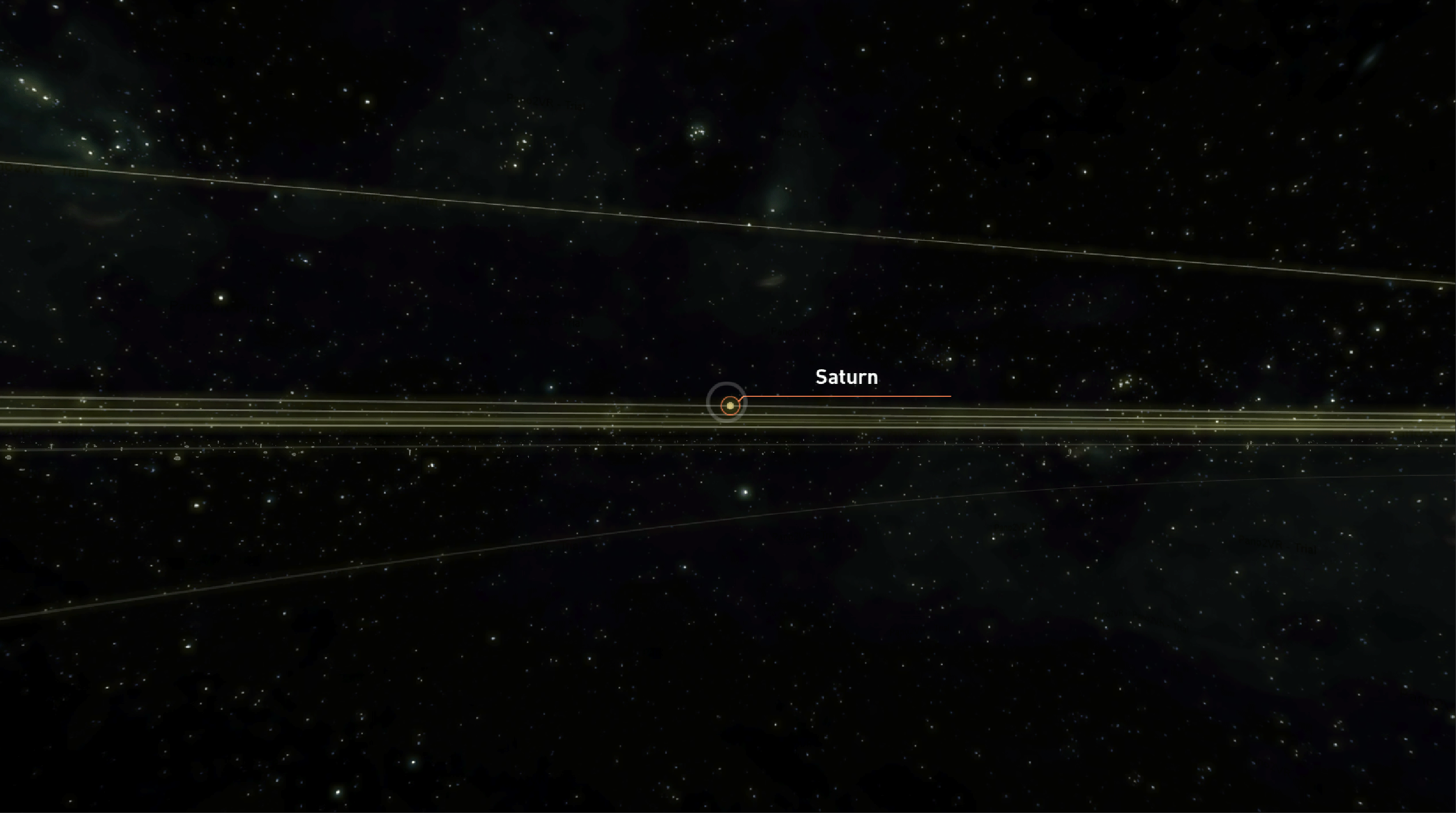

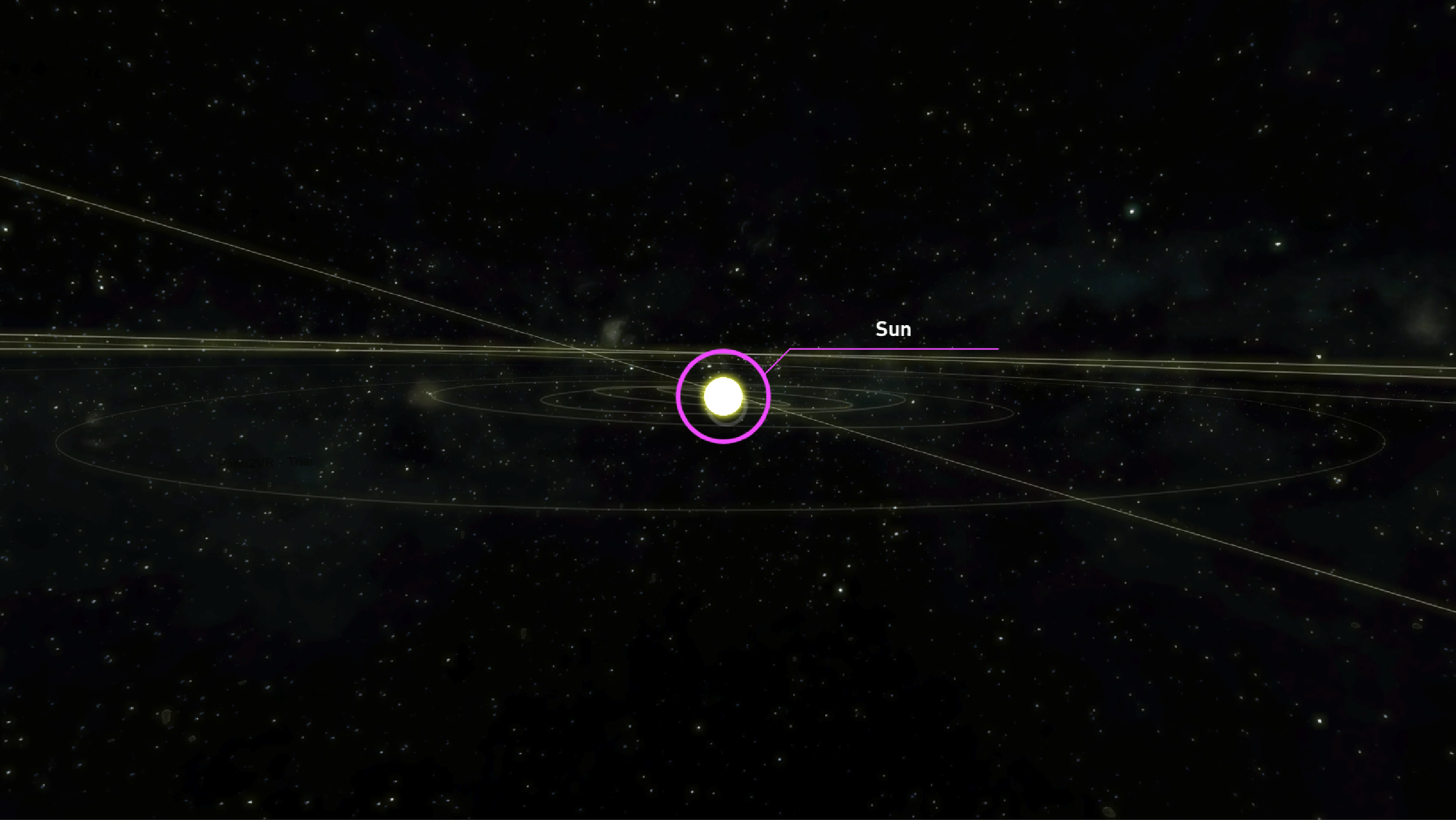

Y-Combinator Research - Solar (2017)

Description: A miniature solar system simulator experience exploring spherical video in VR.

Status: Partially completed, project cancelled

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC, VR (HTC Vive)

Role: Pro bono research fellow

Notable Contributions:

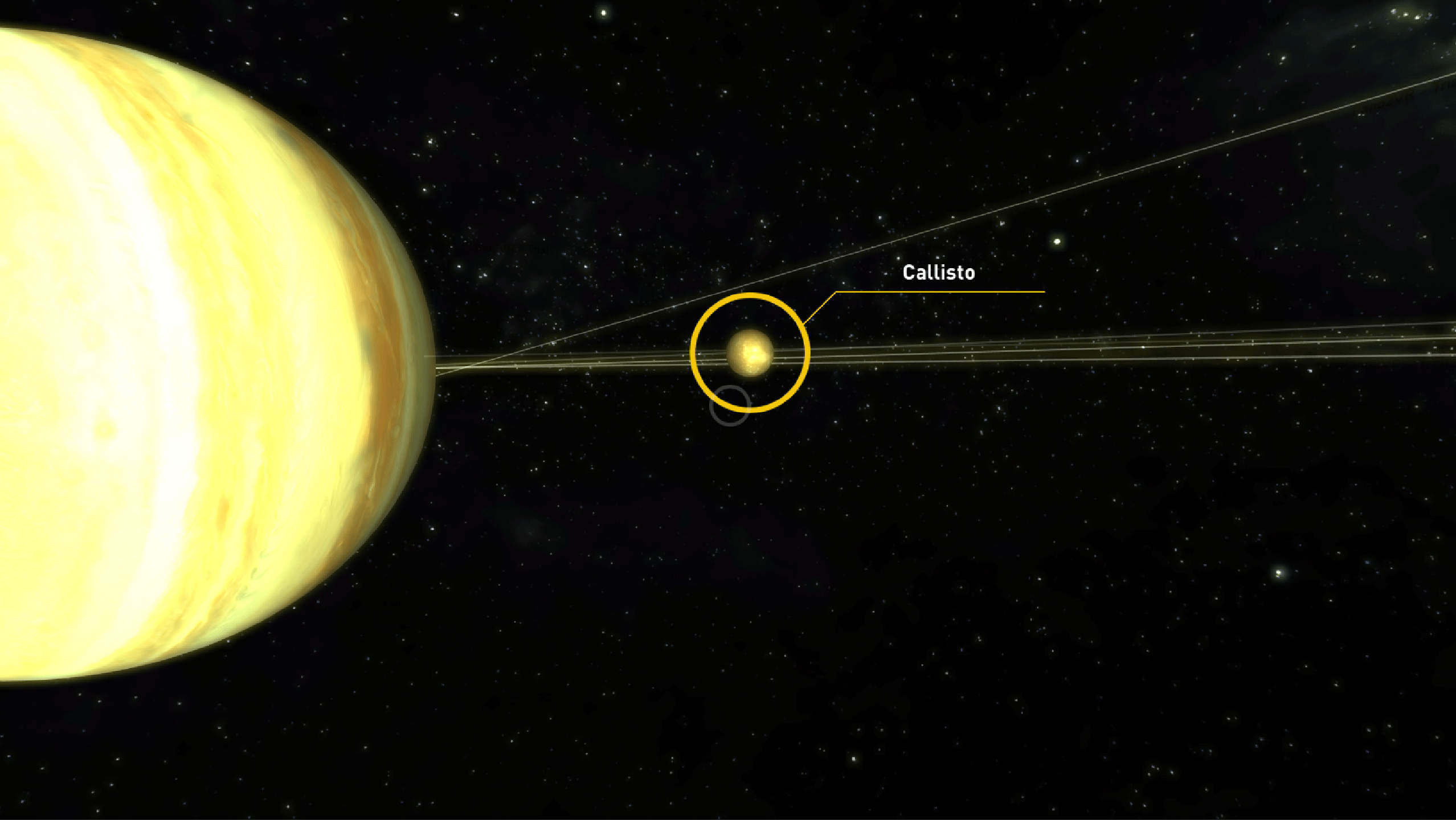

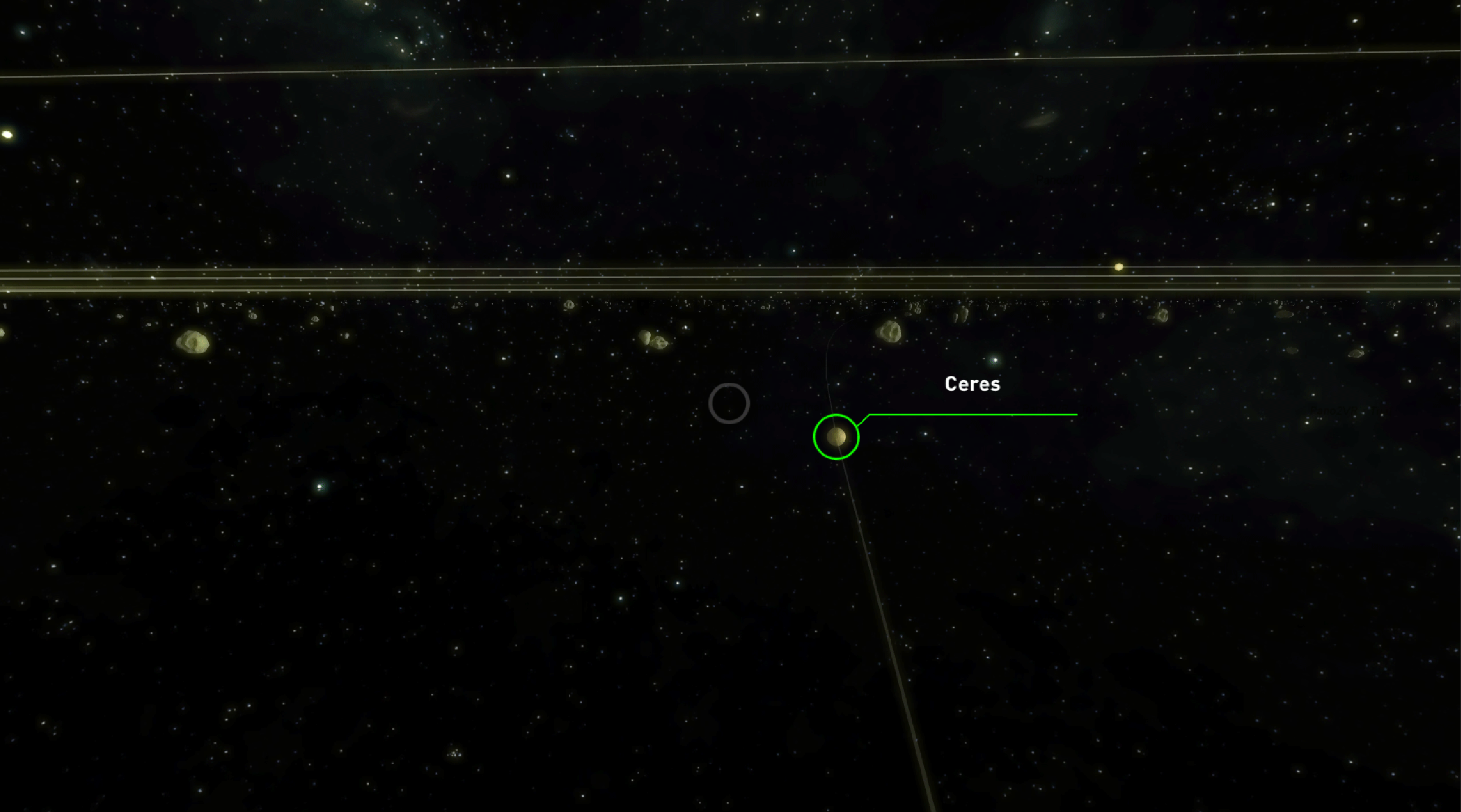

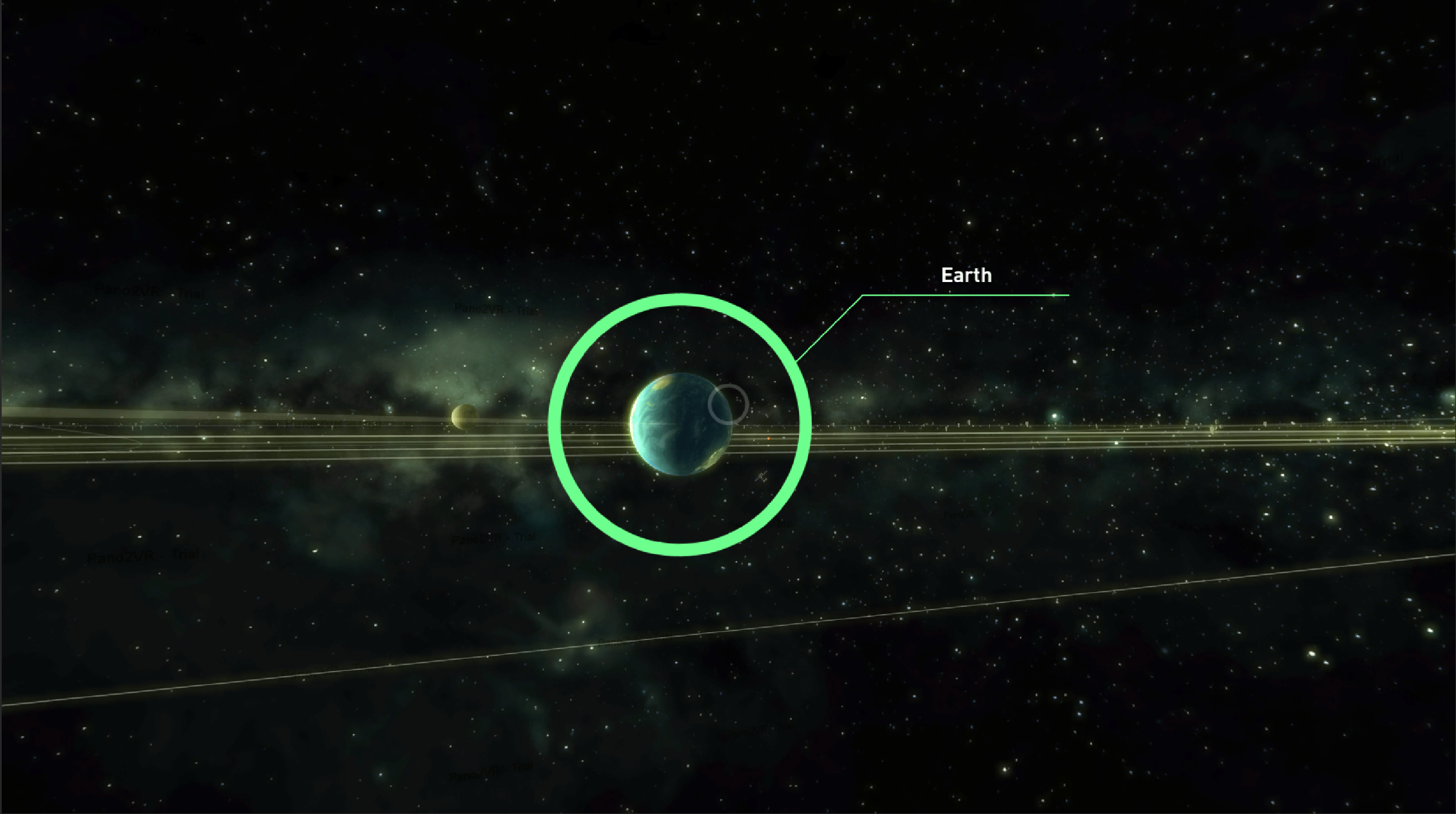

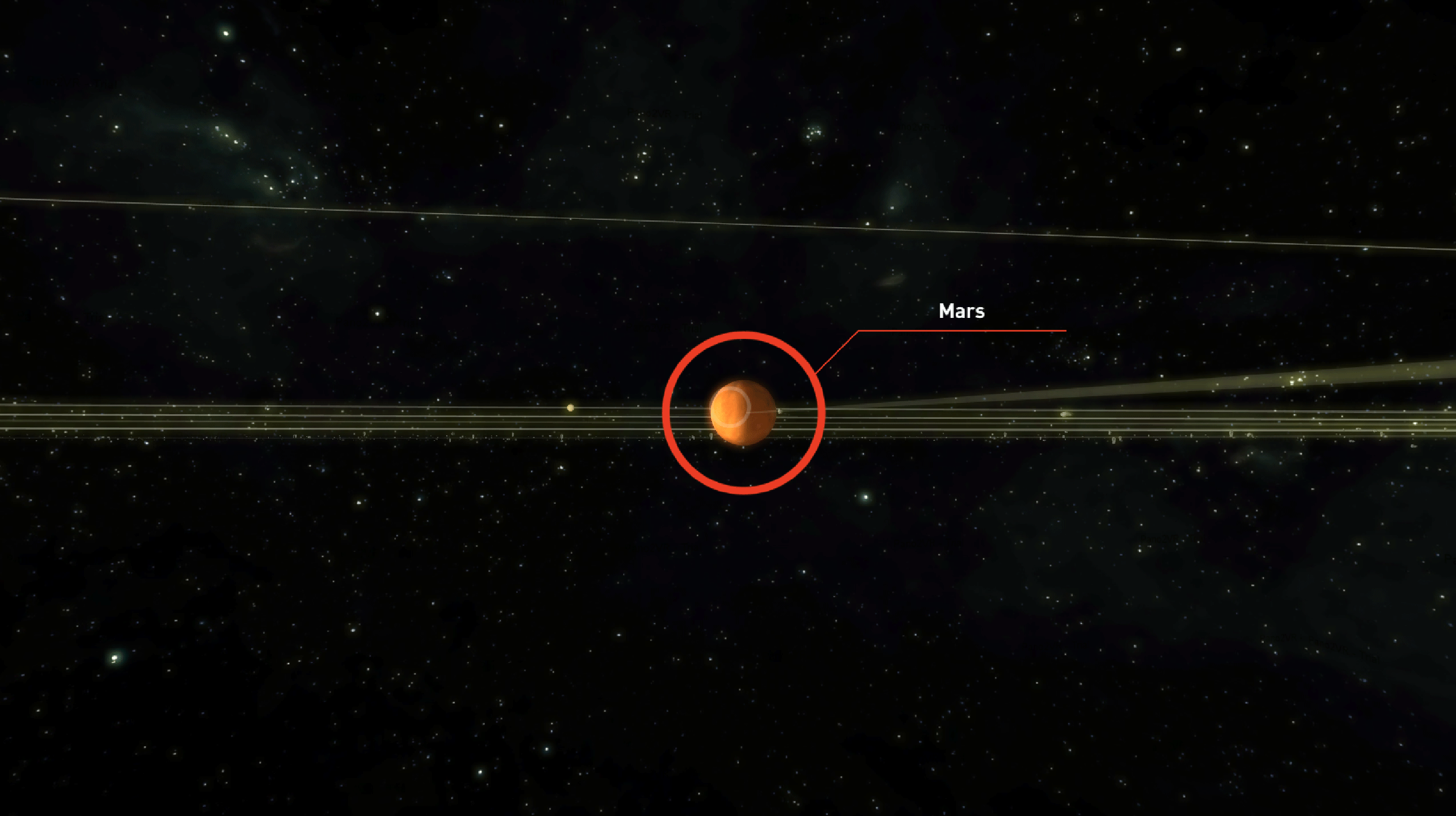

- Created a scaled-down simulation of the Solar System in Unity, complete with accurately scaled orbits, tilts, rates of rotation and elliptical plane orientations

- Implemented a 'tractor-beam' mechanic to tidally lock the player to a planet within a certain range

- Implemented spherical video streaming inside the planets that players could view after proceeding into the interior of the planet once tidally locked

- Three basic Xbox controller schemes that can be cycled at runtime

- A day/night shader for planet Earth to highlight light emitted from cities

- Orbital bodies and moons around various planets, including a scaled-up model of the ISS

- Audio streaming for background music

- 2D UI reticles in 3D space that lock to and identify each planet when looked at, and scale appropriately to the planet's distance from the viewer (surprisingly difficult!)
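One common way to size a world-space reticle by distance is to keep its apparent (angular) size constant, so its world width grows linearly with distance. The Python sketch below shows that geometry under stated assumptions. It is not the project's code, and the function names, the 2-degree minimum and the 1.2 framing margin are all hypothetical.

```python
import math


def reticle_world_size(distance, angular_size_deg):
    """World-space width that subtends `angular_size_deg` when viewed from `distance`."""
    return 2.0 * distance * math.tan(math.radians(angular_size_deg) / 2.0)


def reticle_size_for_planet(distance, planet_radius,
                            min_angular_size_deg=2.0, margin=1.2):
    """Width for a reticle that frames a planet but stays readable when far away.

    Up close, the reticle is slightly wider than the planet's diameter.
    Far away, it is clamped to a minimum apparent size instead of shrinking
    to a dot along with the planet.
    """
    framing = 2.0 * planet_radius * margin
    readable = reticle_world_size(distance, min_angular_size_deg)
    return max(framing, readable)
```

Part of the difficulty noted above is that planets and their distances span several orders of magnitude, so a single fixed scale is either invisible for distant bodies or overwhelming for near ones. Clamping between a framing size and a minimum angular size is one way to handle both ends.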

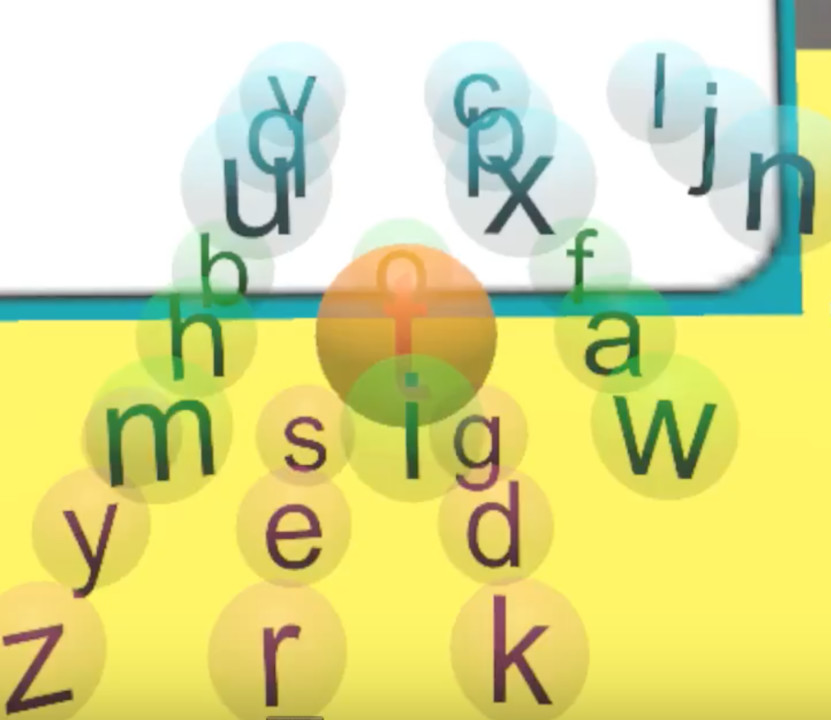

Y-Combinator Research - Keycube, a VR typing experiment (2017)

Description: An exploration of a potentially more efficient way to type using Vive controllers.

Status: Published in a blog post in 2017 at elevr.com

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC, VR (HTC Vive)

Role: Pro bono research fellow

Notable Contributions:

- Created a Unity project demonstrating the concept in the Vive HMD

- Documented my work in a detailed guest blog post on elevr.com

Y-Combinator Research - Cross-platform VR/AR development experiment (2016)

Description: An exploration of developing VR experiences in Unity that would work on both the Vive and the HoloLens devkit 1.

Status: Published in a blog post in 2016 at elevr.com

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC, VR (HTC Vive)

Role: Pro bono research fellow

Notable Contributions:

- Added code to the KitchenSink library to support cross-platform development of Unity VR applications for both the Vive and the HoloLens devkit 1 with UWP

- Tested the cross-platform code in a Unity project

- Documented my work in a detailed guest blog post on elevr.com

InsuranceQuotes.com - Turducken Football (2016)

Description: A seasonal, promotional Thanksgiving football game.

Status: Game cancelled when Insurance Quotes was acquired by Bankrate Inc.

Engine/Language(s)/Libraries: Unity/C#/KitchenSink

Target Platform(s): Mobile (Android)

Role: Independent contractor

Notable Contributions:

- Implemented UI:

- Login and splash screen flows

- Settings menu

- Gameplay HUD

- Debug console

- Animated character on splash screen

- Finished the core kick-off gameplay mechanic:

- Character model with appropriate animations

- Keyboard controls (project was cancelled before I could implement Android controls)

- Kick power mechanic

- Wind mechanic and UI

- Created a stadium level with Asset Store assets

- Audio streaming for background music

Voltage - Queen's Gambit (2014)

Description: A visual romance adventure novel for women (otome game).

Status: Released in 2014 on Google Play and the Apple App Store; taken down in 2017 (10K+ downloads from Google Play).

Engine/Language(s)/Libraries: Unity/C#/Java/Obj-C/Obj-C++/Perl/SQLite/iGui

Target Platform(s): Mobile (Android/iOS)

Role: FTE (Lead Software Engineer)

Notable Contributions:

- Architected and implemented most gameplay and UI features

- Developed game architecture into a reusable library

- Developed asset pipeline and tools (see Magdabot project below for more details) for the art and production teams

- Led the company's effort to modernize its mobile game development practices

- Authored a light back-end with Perl and SQLite3

- Hand-built an onsite blade server, installed its OS and a source control repository, and administered it

- Native plugins

- Architected build process for Android and iOS:

- Set up Mac Minis for remote Xcode builds

- Created a headless Unity build process for building the asset bundles

- Personally oversaw the hiring of a small engineering team:

- Hired a junior engineer internally, mentored two junior engineers

- Hired a gameplay SE and a senior server SE

- Personally oversaw build process and initial app submission

- Arranged and administered hosting via SoftLayer

- Personally oversaw the build process and initial submission to the Apple App Store

Voltage - Magdabot & Chadwick (2014)

Description: An asset pipeline for production of Queen's Gambit. Consisted of a script written in ExtendScript for exporting Photoshop document layers as PNGs, and a standalone desktop editor tool for compositing those images, along with text and audio, to produce assets packaged into zip files and loaded by the title at runtime. Named after Gorgeous Magda, a cross-dressing opera singer, and Chadwick Boseman, a love interest, characters from a previous Voltage title, "To Love & Protect".

Status: Used in 2014 to produce Queen's Gambit.

Engine/Language(s)/Libraries: Unity/C#/XML

Target Platform(s): PC

Role: FTE (Lead Software Engineer)

Notable Contributions:

- Developed an XML schema for game data authoring of content; I prefer JSON, but XML was more legible to the production team and easier to validate

- Created a desktop authoring tool for the production team that allowed them to produce an entire story, including all text, imported audio and art assets; it also included a character creator

- Provided an ExtendScript extension for Photoshop that exported artists' files as PNGs generated from each layer

- Integrated pngquant to minimize image file size with minimal loss of quality

- Architected a build process and runtime systems to package the art content when exported from Magdabot and load it into the game at runtime

- Created a simple caching system for the assets to allow them to be reconstructed if corrupted

Lighthaus - Placenta Educational Experience for Stanford (2013)

Description: An interactive edutainment experience informing the public about the placenta.

Status: Finished in 2013.

Engine/Language(s)/Libraries: Unity/C#

Target Platform(s): PC

Role: Independent contractor

Notable Contributions:

- Adopted the project from a previous developer in a half-finished state

- Finished implementation of the UI, scene flow, voiceover audio and spline animation mechanics

Personal - Kitchen Sink Unity Library (2013-present)

Description: A collection of useful design patterns, UI, AI, and IO code, et cetera, for use across multiple projects, packaged as a DLL and drop-in Unity editor scripts.

Status: Authored in 2013, still in use and being updated.

Engine/Language(s)/Libraries: Unity/C#/VS

Target Platform(s): Any

Role: Author

Notable Contributions:

- Implemented the entire library over a period of years; it includes:

- Unity scene-loading management built on top of SceneManager, in order to manage dependencies via sequential loading of registered scenes

- My own implementation of a behavior tree that supports late-bound creation of trees from JSON data

- MVC boilerplate code, updated over the years to keep up with Unity's ever-changing UI scheme; supports basic fade-in/fade-out tweening

- Implementations of common design patterns like a generic Singleton (years before anyone else thought of it, including Unity), Composite, Observer/Observable, Command and State

- An audio jukebox

- A Java-style event dispatcher years before anyone else

- A simple encryption class derived from a CodeProject article

- A data registry system for serialized data

- Extensions for AudioClip, Enum, StringBuilder and Vector3

- A SQLite wrapper for performing SQL operations from C# at runtime

- Boilerplate touch, controller, mouse and keyboard handlers

- Raycast, camera, framerate and image utilities

- A SQUAD spline implementation

- A debug console

- Serialization helper classes: a blackboard class, a JSON helper and an object converter

- A stopwatch class

- Wrappers for some of these classes to make them compatible with UWP

- An editor tool and custom attribute for managing to-dos in the Unity Editor
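To show what building a behavior tree from JSON data at runtime can look like, here is a minimal Python sketch. It is not the KitchenSink implementation: the JSON schema (`type`, `name`, `children`), the node classes and the action registry are all hypothetical, and the usual "running" status is left out to keep it short.

```python
import json

SUCCESS, FAILURE = "success", "failure"


class Action:
    """Leaf node: runs a callable against the blackboard."""
    def __init__(self, fn):
        self.fn = fn

    def tick(self, blackboard):
        return SUCCESS if self.fn(blackboard) else FAILURE


class Sequence:
    """Composite: succeeds only if every child succeeds, in order."""
    def __init__(self, children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Composite: succeeds as soon as any child succeeds."""
    def __init__(self, children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == SUCCESS:
                return SUCCESS
        return FAILURE


COMPOSITES = {"sequence": Sequence, "selector": Selector}


def build_tree(spec, actions):
    """Recursively turn a parsed JSON spec into node objects.

    `actions` maps action names found in the data to callables, so the tree
    shape is decided by data at runtime rather than by compiled code.
    """
    kind = spec["type"]
    if kind == "action":
        return Action(actions[spec["name"]])
    if kind in COMPOSITES:
        return COMPOSITES[kind]([build_tree(c, actions) for c in spec["children"]])
    raise ValueError(f"unknown node type: {kind}")


def attack(blackboard):
    blackboard["log"].append("attack")
    return True


def patrol(blackboard):
    blackboard["log"].append("patrol")
    return True


TREE_JSON = """
{"type": "selector", "children": [
    {"type": "sequence", "children": [
        {"type": "action", "name": "has_target"},
        {"type": "action", "name": "attack"}]},
    {"type": "action", "name": "patrol"}]}
"""

ACTIONS = {
    "has_target": lambda bb: bb.get("target") is not None,
    "attack": attack,
    "patrol": patrol,
}

tree = build_tree(json.loads(TREE_JSON), ACTIONS)
```

Because only the registry of action names is fixed in code, a designer could reshape the tree by editing the JSON without recompiling.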

Bioware - Project Gibson (2011-2013)

Description: A strategic, ship fleet combat game set in the Mass Effect universe.

Status: Not completed; studio closed.

Engine/Language(s)/Libraries: Unity/C#/Java/Obj-C++

Target Platform(s): Mobile (Android/iOS)

Role: FTE (SE III)

Notable Contributions:

- Developed an FSM for ship behaviors with a novel concept I called "threshold nodes" for the states in the FSM matrix to allow for more sophisticated behavior than is typically associated with FSMs; nowadays they call this 'utility AI'

- UI, other game mechanics like power boosts, galaxy map, debug console

- Implemented service call middleware in C#

- Implemented service calls in Java and C#

- Native plugins

- Worked with an initial team to define the core architecture of the game based on learnings from our first title, Dragon Age: Legends

- The above regards the most recent version of the game we worked on; I also worked on 3 or so previous versions of the game through multiple reboots, the acquisition of and merging of our studio with a social games company by Bioware, and the coming and going of Bioware as a games label

Bioware - Dragon Age: Legends (2010-2011)

Description: A Facebook-based, turn-based tactical RPG set in the Dragon Age universe.

Status: Released on Facebook in 2011

Engine/Language(s)/Libraries: Flash/Flex/ActionScript 3/Java

Target Platform(s): Facebook/Google+

Role: FTE (SE III)

Notable Contributions:

- Created the end-to-end asset pipeline for the game; all pixels passed through my system:

- An Ant build script that bundled assets up into SWCs for runtime consumption and included cache-busting

- Runtime systems to load individual MovieClip assets by making requests against an asset manifest at runtime

- Timeout and retry logic for asset loading

- Full integration with the technical artist's 2D bone character animation system

- Fully implemented the store UI; all dollars passed through my storefront

- Various other UI and debug work in support of the game

- Full implementation and ownership of the goal system

- Thought leader on the team regarding architectural approaches

- Took the lead on compiling our code into runtime shared libraries (RSLs), Flash's equivalent to DLLs, to make code more reusable, testable and modular

- Implemented many individual service calls on both the server, in Java, and in ActionScript

- Owned integration of technical artist's systems with engineering systems

- On-call live support once the game launched; the team adopted my approach of a rotating support roster

- Interviewed several new additions to the team

- Mentored junior engineers and interns

- Prototyped blitting code for the next Flash title as DA:L was winding down, but it was upstaged by Stage3D and the phasing out of Flash in favor of Unity

Playfirst - Chocolatier: Sweet Society (2010)

Description: In Chocolatier: Sweet Society, you create and manage your Chocolate Shoppe, make delectable chocolates, sell them to your friends, and work your way up to building your own chocolate empire.

Status: Released in 2010 on Facebook; no longer available.

Engine/Language(s)/Libraries: Flash/Flex/ActionScript 3

Target Platform(s): Facebook

Role: FTE (Senior Software Engineer, Social Games)

Notable Contributions:

- Our team inherited this project in a half-complete state from an external vendor

- UI, debugging, polish and game mechanic support touching most systems and screens

- Pathfinding support

- Live, on-call support once the game launched on Facebook

Playfirst - Wedding Dash Bash (2010)

Description: A Facebook entry of the Wedding Dash franchise, a casual strategy game in which the player plays as Quinn, a wedding planner, to plan successful weddings for clients.

Status: Released in 2010 on Facebook; no longer available.

Engine/Language(s)/Libraries: Flash/Flex/ActionScript 3

Target Platform(s): Facebook

Role: FTE (Senior Software Engineer, Social Games)

Notable Contributions:

- Architected and implemented the core game mechanic of paper-doll compositing in 2D of characters and scenes; took the lead on this project

- UI implementation

Playfirst - Diner Dash (Facebook) (2009)

Description: A Facebook entry of Playfirst's popular Diner Dash franchise in which players play as Flo, a diner waitress, to fulfill customer orders in a busy diner.

Status: Released in 2010 on Facebook; no longer available (2.5M MAU peak).

Engine/Language(s)/Libraries: Flash/Flex/ActionScript 3

Target Platform(s): Facebook

Role: FTE (Senior Software Engineer, Social Games)

Notable Contributions:

- I worked on an early version of this before it was released

- Implemented A* pathfinding for Flo in the diner

Personal - Chitin Modularity Library (2008)

Description: A modularity framework to optimize the loading of applications written with the Flash platform in the days before high-bandwidth connections were commonplace.

Status: Used until about 2011.

Engine/Language(s)/Libraries: Flex/ActionScript 3

Target Platform(s): Facebook

Role: Author

Notable Contributions:

- A lightweight system built on top of the Flash-defined module classes to modularize a Flash application

- Provided a message-passing mechanism between modules